About Time

TL;DR

- There are two challenges with time in computer systems: measuring time, and representing time.

- The former problem is rightfully considered too hard for software engineers, and should best be left to the generally much cleverer hardware people.

- The latter problem is often a source of cleverness, which can be avoided by just storing seconds + nanos since epoch.

About Time

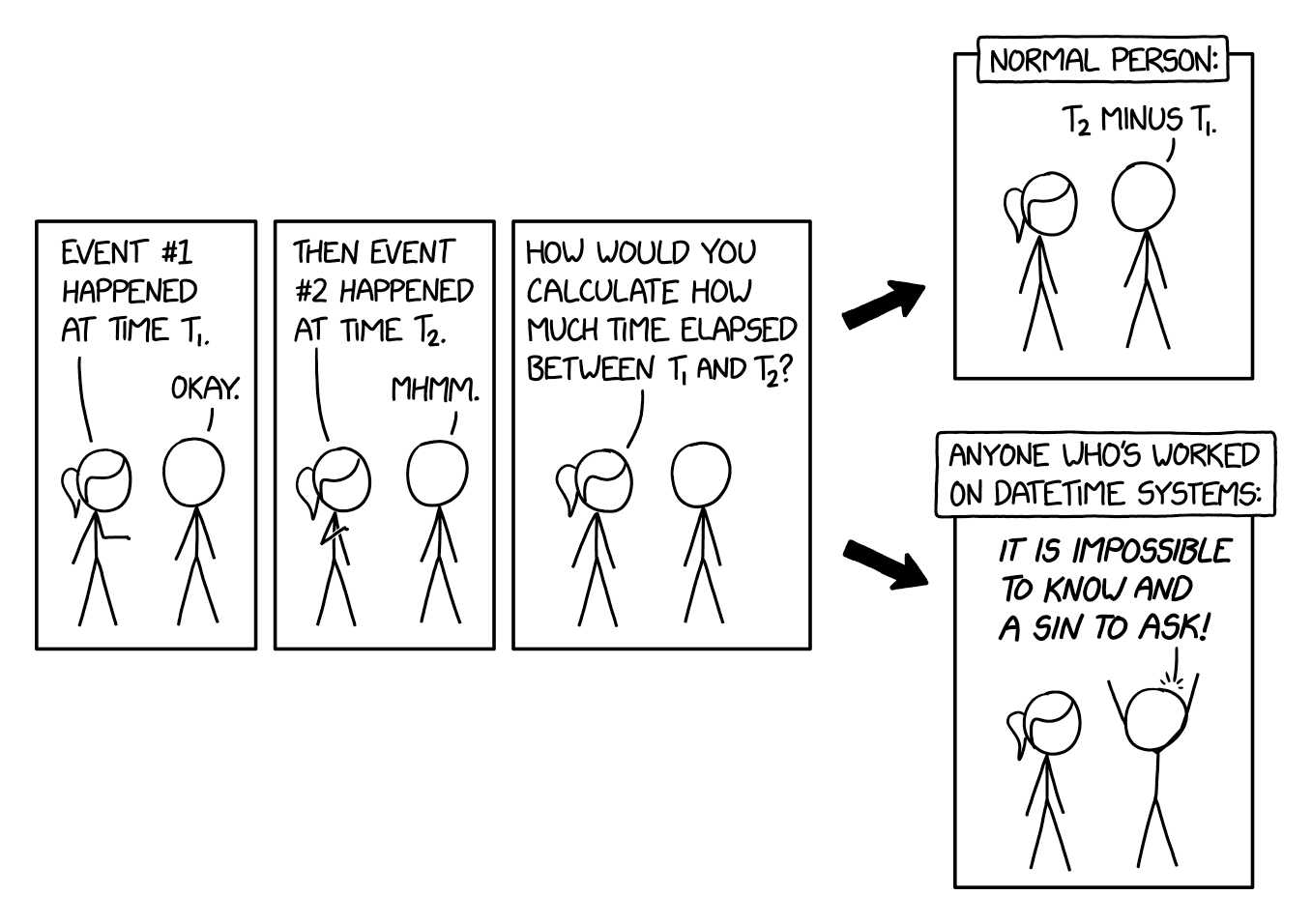

This is funny, because it's true. But, worry not! For once, we get to act like the normal people: T2 - T1 is a reasonable answer and works for the vast majority of our use cases.

Storing Time

Here is the official friso.lol guideline on storing an instant in time: use a struct that stores the number of seconds since epoch, and the number of nanoseconds within the second. A struct can be any value-based type provided by your language (data class, record, struct, case class, etc.). When communicating between systems, absolutely do the same thing. Avoid ISO-8601.
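As a minimal sketch of such a struct, here is a Python dataclass. The names `Instant`, `seconds`, and `nanos` are illustrative choices and not part of any standard; the only assumption baked in is that nanos always stay within a single second.

```python
from dataclasses import dataclass

NANOS_PER_SECOND = 1_000_000_000


@dataclass(frozen=True, order=True)
class Instant:
    """An instant as whole seconds since epoch plus nanoseconds within that second."""

    seconds: int  # whole seconds since epoch; negative means before epoch
    nanos: int = 0  # nanoseconds within the second, always in [0, 999_999_999]

    def __post_init__(self) -> None:
        # Reject values that would make two structs describe the same instant.
        if not 0 <= self.nanos < NANOS_PER_SECOND:
            raise ValueError("nanos must be within [0, 999_999_999]")


start = Instant(seconds=1_700_000_000, nanos=250_000_000)
later = Instant(seconds=1_700_000_000, nanos=750_000_000)

# order=True compares (seconds, nanos) field by field, which is chronological order.
print(start < later)
```

Keeping nanos non-negative and below one second means every instant has exactly one representation, so equality and ordering come for free.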

This approach, while simple, raises some questions.

What Is Epoch?

Some people might tell you that time runs out, but this is not true. Time is infinite. Because time is infinite, we cannot express an instant in time in absolute terms. An instant is always relative to another instant.

To represent an instant relative to another instant, we need a time scale. Basically, a sort of (one-dimensional) coordinate system for time. A coordinate system needs an origin, where the value is zero. That origin is the epoch of the time scale.

To further appreciate the usefulness of a time scale, consider that on a time scale each instant has a value representation as well as a lexical representation. The value representation denotes an instant using the duration of time passed since epoch, whereas the lexical representation denotes an instant in a human-readable way, often anchored to civil time (such as ISO-8601).

As an aside: the value representation of time on a time scale is continuous. Any duration can always be split into two durations of half the length. However, due to the digital nature of our computing devices, time is discretised. And the world has decided that nanosecond granularity ought to be enough for anybody.

One such time scale is Unix Time, which has its epoch sit at midnight UTC at the start of the year 1970. So, value representation 0 seconds, 0 nanoseconds, has lexical representation 1970-01-01 00:00:00.000000. Add 60 seconds, and the lexical representation advances by 1 minute. Add 86400 seconds, and the lexical representation advances by 1 day. And so on.
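The mapping from value representation to lexical representation can be sketched with Python's standard library. The helper name `lexical` is made up for this example, and since `datetime` only carries microseconds, the nanos are truncated on the way.

```python
from datetime import datetime, timedelta, timezone

# The epoch of Unix Time, expressed as a lexical (calendar) value in UTC.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def lexical(seconds: int, nanos: int = 0) -> str:
    """Render seconds + nanos since epoch as a calendar date and time in UTC."""
    # datetime has microsecond resolution, so nanos are truncated to micros.
    moment = EPOCH + timedelta(seconds=seconds, microseconds=nanos // 1000)
    return moment.strftime("%Y-%m-%d %H:%M:%S.%f")


print(lexical(0))       # 1970-01-01 00:00:00.000000
print(lexical(60))      # 1970-01-01 00:01:00.000000
print(lexical(86_400))  # 1970-01-02 00:00:00.000000
```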

Being the ubiquitous time scale for many systems, Unix Time is probably the reason that when we say epoch, we tend to instantly think about January 1st, 1970. But there are many more; see this list of Epochs.

How Long Is a Long Day?

Furthermore, a time scale typically defines the duration of one second. This is a very unfortunate necessity for time scales. If all we cared about was the value representation, we would be just fine with one definition of a second. But since we equally care about the lexical representation, we need a way to go from seconds to days, and back. This is problematic, because not all days have the same duration (Earth rotation variances, solar things, astro-something, cosmos, blah, blah).

There are two popular approaches to this problem:

- every second is equally long, but the number of seconds per day is variable

- not every second is equally long, but the number of seconds per day is fixed

Approach number 1 introduces leap seconds. Approach number 2 requires a clock that stretches the fixed number of seconds across the slightly longer day, ideally in a well-defined manner. This is called a leap smear.

The benefit of the first approach is correctness at the expense of complexity introduced by non-uniform days. The benefit of the second approach is that it hides the complexity in the clock implementation, and everything on top can treat days as uniform. The second approach has become preferred in most software. Quite probably because it does not require a leap second lookup table for all operations.
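To get a feel for what a leap smear does, here is a rough sketch of a linear smear over a single window. The window length and the linear shape are assumptions picked for illustration; real clocks each define their own smear, and this is not a description of any particular one.

```python
SMEARED_SECONDS_PER_DAY = 86_400  # what the smeared clock reports for the window
SI_SECONDS_IN_WINDOW = 86_401     # the window really contains one extra second


def smeared_seconds(si_elapsed: float) -> float:
    """Map SI seconds elapsed since the window started to smeared clock seconds."""
    if not 0 <= si_elapsed <= SI_SECONDS_IN_WINDOW:
        raise ValueError("outside of the smear window")
    # Every smeared second is stretched by the same tiny factor.
    return si_elapsed * SMEARED_SECONDS_PER_DAY / SI_SECONDS_IN_WINDOW


# Halfway through the window the smeared clock is half a second behind.
print(smeared_seconds(43_200.5))
# At the end of the window it has absorbed the whole leap second.
print(smeared_seconds(86_401))
```

Everything built on top of such a clock sees exactly 86400 seconds in the day, which is the whole point of the second approach.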

There is also one slightly less popular approach to this problem, which is to introduce a discontinuity in the time scale itself. Unfortunately, Unix Time does this. When a leap second is introduced in UTC at the end of the day, the value representation of Unix Time after the leap second will jump back to the start of the leap second to introduce another second with the same representation. Very confusing.
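The repeated value can be observed with plain Unix Time arithmetic. The sketch below uses `calendar.timegm` from the Python standard library, which treats every day as 86400 seconds, and feeds it the leap second that UTC inserted at the end of 2016.

```python
import calendar

# Broken-down UTC times around the leap second at the end of 2016.
leap_second = (2016, 12, 31, 23, 59, 60)
next_midnight = (2017, 1, 1, 0, 0, 0)

# timegm counts 86400 seconds per day, so it knows nothing about leap seconds.
unix_leap = calendar.timegm(leap_second)
unix_midnight = calendar.timegm(next_midnight)

# Two different instants in UTC end up with the same Unix Time value.
print(unix_leap, unix_midnight)
print(unix_leap == unix_midnight)  # True
```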

To make matters more interesting, time scales build on top of other time scales. Unix Time builds on top of UTC, which in turn builds on top of TAI. That last one is based on SI seconds, which have a strict definition. As a result, TAI does not care about calendar days, so it is now 37 seconds ahead of UTC. Altogether, these are all just different ways of describing how to handle the variance in the length of a day in SI seconds.

Now What?

So, when we store our time as seconds + nanos since epoch and we care to know the elapsed time between two events, what is the problem with doing T2 - T1? Well, the problem is that T1 and T2 could come from different systems using different time scales. For example, Java uses UTC-SLS for one segment of its own Java Time Scale (yes, that's a thing). Google and AWS services use a different, non-standardised leap smear strategy. Throw Unix Time in the mix, and there could be entire seconds missing from the value representation. Then, when T1 and T2 are sufficiently far apart, the duration that we get from the calculation could be off by a whole whopping… 27 seconds. That's it. Less than half a minute. Big deal!

For most software that reports durations in minutes or coarser units, this difference is immaterial. For most software that reports durations in seconds or finer units, time tends to be obtained from a single system clock, operating on one time scale, and the duration will be just fine. As long as you are not going to space, embrace T2 - T1.
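For completeness, here is what T2 - T1 could look like on seconds + nanos pairs. This is a sketch that assumes both instants come from the same time scale; the function name `elapsed` and the tuple layout are choices made for this example only.

```python
NANOS_PER_SECOND = 1_000_000_000


def elapsed(t1: tuple[int, int], t2: tuple[int, int]) -> tuple[int, int]:
    """Return t2 - t1 as (seconds, nanos), with nanos in [0, 999_999_999]."""
    total_nanos = (t2[0] - t1[0]) * NANOS_PER_SECOND + (t2[1] - t1[1])
    # divmod floors, so the nanos part stays non-negative even when t2 < t1.
    return divmod(total_nanos, NANOS_PER_SECOND)


t1 = (100, 900_000_000)
t2 = (102, 100_000_000)
print(elapsed(t1, t2))  # (1, 200000000)
```

Doing the subtraction on a single integer count of nanos avoids handling the borrow between the two fields by hand.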

What About Time Zones?

Time zones are a construct used for civil time, mapping a 24 hour period to the cycle of the day in a way that sunrise falls somewhere between 5am and 8am. There are many time zones, and they change regularly for practical, political, and other non-obvious reasons.

Technically, a time zone is a definition of how to establish the local UTC offset for a given instant. The instant is important here, since the UTC offset as well as the daylight saving rules can change over the years. You cannot map a time zone to a UTC offset without specifying when in history the time zone is applied.

Because of this, the only useful way to store time zone information is to store the IANA time zone ID in addition to your instant (on whatever time scale you have). An alternative in rare cases is to store a geolocation, which can be translated into an IANA time zone ID.
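A small sketch of an instant that carries an IANA time zone ID, using Python's `zoneinfo` module (which assumes time zone data is available on the system). The class `ZonedInstant` and its field names are hypothetical. The same zone ID yields a different UTC offset depending on the instant it is applied to.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


@dataclass(frozen=True)
class ZonedInstant:
    seconds: int  # whole seconds since the Unix epoch
    nanos: int    # nanoseconds within the second
    zone_id: str  # IANA time zone ID, for example "Europe/Amsterdam"

    def local(self) -> datetime:
        """Resolve the local date and time; nanos are ignored in this sketch."""
        utc = datetime.fromtimestamp(self.seconds, tz=timezone.utc)
        return utc.astimezone(ZoneInfo(self.zone_id))


def at_noon_utc(year: int, month: int, day: int) -> int:
    """Seconds since the Unix epoch for 12:00 UTC on the given date."""
    return int(datetime(year, month, day, 12, tzinfo=timezone.utc).timestamp())


winter = ZonedInstant(at_noon_utc(2024, 1, 15), 0, "Europe/Amsterdam")
summer = ZonedInstant(at_noon_utc(2024, 7, 15), 0, "Europe/Amsterdam")

# Same time zone, different UTC offsets: one hour in winter, two in summer.
print(winter.local().utcoffset())
print(summer.local().utcoffset())
```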

Why Not ISO-8601

It is sometimes believed that ISO dates are clever, because they represent both the instant and the time zone of where the instant was recorded. This is false, and misguided. It is false, because ISO dates encode a UTC offset, but not a time zone. These are not the same thing. It is misguided, because there is nothing explicit about the UTC offset in an ISO date being the local UTC offset at the site of recording.

What you do get from ISO dates are:

- Log files recorded on different machines sorting non-chronologically together.

- API users who inadvertently add the wrong UTC offset to a local time.

- Parse errors from systems not implementing the full specification (i.e. systems that have a faulty regex somewhere).

- A false sense of human readability (aside: I propose a distinction between human parseable, and human readable).
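The first item is easy to reproduce. In the sketch below, two made-up log timestamps carry different UTC offsets, and sorting them as text gives a different order than sorting them as instants.

```python
from datetime import datetime

# Two hypothetical log timestamps written by machines with different offsets.
lines = [
    "2024-01-01T10:00:00+02:00",  # 08:00 in UTC
    "2024-01-01T09:00:00+00:00",  # 09:00 in UTC
]

# Sorting the raw strings compares characters, not instants.
lexical_order = sorted(lines)

# Parsing first and sorting on the instant gives the chronological order.
chronological_order = sorted(lines, key=datetime.fromisoformat)

print(lexical_order == chronological_order)  # False
```

Seconds + nanos since epoch do not have this problem, because comparing the values is the same as comparing the instants.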

Recap

In conclusion:

- Use a struct with seconds + nanos since epoch for representing time.

- If the local time of the event matters, add the IANA time zone ID to your struct.

- A UTC offset is not a time zone.

- If the accuracy of durations reported by your software matters at the sub-minute level, be sure to control and standardise both your clock and your time scale.

In case you are nonetheless concerned about the correctness of your handling of time in your code, familiarise yourself with these excellent Falsehoods Programmers Believe About Time.